Background

Forestry Tasmania is required (via Clause 77 of the Tasmanian Regional Forestry Agreement) to make available a minimum annual hardwood veneer and sawlog yield of 300,000 cubic meters. It also exports many millions of tons of woodchips per annum. These wood products must be produced on a long-term sustainable basis. Given that the majority of Tasmania's forest estate is comprised of "native forests" (the vast majority of which have been logged over the past 200 years), what is "sustainable" is a multifaceted problem; Oracle Spatial provides a key solution toward the achievement of a measurable and transparent answer to the "sustainability" question.

The problem starts with "measuring" the forest estate. This involves the generation of unbiased statistical samples (an Oracle Spatial process that was not mentioned) which field crews are required to find (GPS), measure and record (in a fully integrated Oracle Spatial database). From these measurements, and other spatial and non-spatial input data sources, the growth of the forest is simulated over time using documented and published peer-reviewed methodologies and algorithms.

Osborn's talk covered the use of Oracle Spatial in generation of inputs to the simulation algorithms. One of the critical input factors into the simulation is the effective and efficient geo-processing of multiple polygonal datasets. This geo-processing generates new data which are then used in the complex linear programming (LP) algorithms and processes that implement the simulations that calculate an estimate of what is "sustainable" with respect to the production of wood products. Thus the geo-processing provides the (non-spatial) simulation algorithms access to data relationships that come by virtue of them being spatial data. (This is what is known as Waldo Tobler's First Law of Geography: That "everything is related to everything else, but nearby things are more related than distant things.")

Implementation

Traditionally, the provision of these inputs has been done via batch, external and disconnected geo-processing inside file-based GIS software (for example the excellent IDENTITY command in ArcInfo Workstation). The problems with this approach are legion: the most important of which is the asynchronous, laborious and manual process of extraction, processing, re-loading and re-linking the geospatial component of the database. The goal of the project was to see if it could deliver the spatial inputs to the simulation through fully integrated processing that required no external manual intervention (i.e., completely self-contained Oracle based processing).

To add further complications, a stated goal of the processing was to use a single SQL SELECT statement for all geo-processing regardless of the scale of the inputs. Thus it was expected that the SQL statement encapsulating the geo-processing had to be used for simulations over a single area of a hundred hectares, thousands of hectares represented by various "administrative" groupings (e.g., "districts") and even 100% of the forest estate (logically the whole of Tasmania)! As Osborn's opening slide put it, the goal was:

This was a tall order that would daunt most developers and geospatial professionals for a variety of reasons. For example, it is a generally accepted norm for database processing that index-based table searches (such as those required for B-Tree based attribute searching) are not efficient when the resultant set is large in relation to the set of all objects in the table (this is often referred to as index "selectivity"). This was an issue with earlier versions of Oracle Spatial's RTree indexing, but recent optimizations in 10g have provided some alleviation of this bottleneck. Also, geo-processing using small, uncomplicated areas, can alter radically when much larger and more complicated areas are used.

Let's Start at the Very Beginning…

Initial testing confirmed the commonly held view that Oracle Spatial based geo-processing, regardless of all other benefits, would be too slow. The initial, naive, SQL statements constructed to do the geo-processing, ranged from taking hours to many days to run.

Initial (A very common, naïve, spatial outer-join from hell!)

INSERT INTO Target_Table

( ou_id, ou_gis_id, ta_id, ta_gis_id, Shape )

SELECT ou.id, ou.gis_id, ta.id, ta.gis_id, Intersection(o.Shape, t.Shape)

FROM Target_Area ta,

Operational_Unit ou

WHERE ta.gis_id =

AND ou.gis_id = ;

(All SQL statements in this report have had specific implementation details removed to aid readability.)

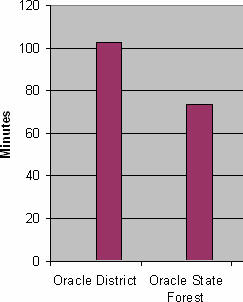

Tuning of this initial SELECT statement involved some pretty standard SQL optimizations that, for small scale areas, resulted in multi-day queries processing time dropping to a few hours. Osborn showed a slide which had a single (tuned) "overlay" (one SQL SELECT statement for a single administrative area – 1/5th of the state's total area) taking 100 minutes (a bit over an hour and a half) to process.

After

INSERT INTO Target_Table

( ou_id, ou_gis_id, ta_id, ta_gis_id, Shape )

SELECT ou.id, ou.gis_id, ta.id, ta.gis_id, Intersection(o.Shape, t.Shape)

FROM Target_Area ta,

Operational_Unit ou

WHERE ta.gis_id =

AND AnyInteraction(ou.Shape, ta.Shape) = TRUE

AND ou.gis_id =

AND Intersection(ou.Shape, ta.Shape) contains an area.

The Need for Speed – Rethink the definition

Even this major performance improvement was considered to be sub-optimal. (If you ask a user: "How fast is fast?" the standard response is: "Not fast enough!").

Osborn went on to describe how a simple redefinition of the area being processed could result in the query time dropping to around 75 minutes. This is easy to achieve in databases through changes to the logical – and thus, physical – model held in the database.

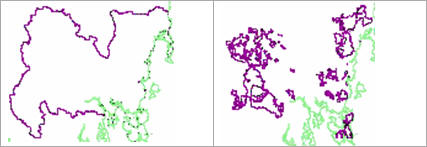

For example, a "district" level simulation was using the administrative area that defined the geographic extent of that area. But the actual amount of state forest under management was far smaller as the following images show (the green is the coastline, the purple a "district" boundary).

|

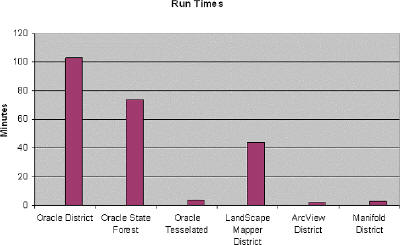

Thus "district" processing means "processing all state forested areas within a district" – using the latter in the geo-processing instead of the former had significant performance benefits. This was implemented across the whole of the state via simple changes to the data model. The speed difference can be seen in the following graph.

|

Why stop there if more speed is possible?

The power of Minimum Bounding Rectangles (MBR)!

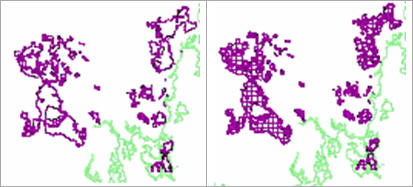

Spatial computations, such as intersection, union, clipping etc, of spatial data are computationally expensive, especially when processing very large polygons as in this application. Knowing that most search processes for spatial data are based on minimum bounding rectangle (MBR) indexing and query, the development team decided to see what effect implementing a simple "tessellation" of the geo-spatial data via a simple rectilinear grid would have on processing time.

In Osborn's trial, each administrative district's forest estate was broken into parts by a preliminary overlay with a simple rectilinear grid (a map sheet index). Visually this can be shown as follows:

|

The changes to the database schema were minimal: all that was required was the introduction of a "many" table such that the original table's spatial description was stored as a set of tessellated objects. The following data model shows the tessellation from a spatial data, data model and SQL perspective.

|

SQL Before (As shown above)

INSERT INTO Target_Table

( ou_id, ou_gis_id, ta_id, ta_gis_id, Shape )

SELECT ou.id, ou.gis_id, ta.id, ta.gis_id, Intersection(o.Shape, t.Shape)

FROM Target_Area ta,

Operational_Unit ou

WHERE ta.gis_id =

AND AnyInteraction(ou.Shape, ta.Shape) = TRUE

AND ou.gis_id =

AND Intersection(ou.Shape, ta.Shape) contains an area.

What this SQL says is for a particular class of target area (t.gis_id = ) find me all operational units that have some sort of geometric interaction (filter: AnyInteraction()). But for those that do interact, return only those with a specific identifier (o.gis_id = ) and whose actual interaction includes an area. (Any two areas can geometrically interact and share only a point, a line, an area or combinations of all of them.)

SQL After (the modifications are shown in bold)

INSERT INTO Target_Table

( ou_id, ou_gis_id, ta_id, ta_gis_id, Shape )

SELECT ou.id, ou.gis_id, ta.id, ta.gis_id, Intersection(o.Shape, t.Shape)

FROM Target_Area ta,

Target_Area_Shapes tas,

Operational_Unit ou

WHERE ta.gis_id =

AND tas.id = ta.id

AND AnyInteraction(ou.Shape, tas.Shape) = TRUE

AND ou.gis_id =

AND Intersection(ou.Shape, tas.Shape) contains an area.

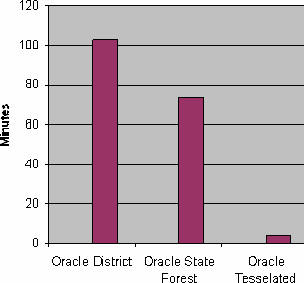

As you can see, the model change required only the most trivial of changes to the original SQL SELECT statement. However, the change resulted in the processing dropping from 75 minutes to around 3 minutes as can be seen in the amended chart!

|

Osborn went on to compare this to what a traditional "fat client" GIS package would be able to achieve. The resultant slide is included so that the reader can see how good the Oracle Spatial based solution was if only speed was the determinant of "success."

|

The value-add that Oracle Spatial geo-processing adds to this process is its ability to deliver its outputs to the LP programs in the fastest, most efficient and least complicated methods possible.

Conclusion

Osborn's presentation showed that with some simple rethinking of the problem and processing techniques, "within database" spatial geo-processing is possible without the need to use an external GIS software package. If you are interested in more information or implementing such techniques within your organization, please feel free to contact me.