Ed. Notes: The Center for Geospatial Intelligence at the University of Missouri, Columbia is, at its core, a multidisciplinary environment designed to train people in the collection, processing, and refinement of geospatial intelligence and to make them knowledgeable in the related technologies and disciplines. Led by Dr. Curt Davis, Croft Distinguished Professor, the Center has a number of substantial research programs and is working on several projects for the NGA and others. It also recently received a $1.75 million grant included in the FY06 Defense bill.

This article, reprinted with permission from the organization's website, describes a research project to expand the scope of unmanned aerial vehicles (UAVs). It discusses building the mechanisms needed to support multiple geo-registered video streams and pushing the envelope for gathering and processing geospatial intelligence on the fly, in real time.

Real-time imagery and video have become an increasingly important source of information for remote surveillance, intelligence gathering, situational awareness, and decision-making. In airborne video surveillance, the ability to associate geospatial information with imagery intelligence allows decision makers to view the geographic context of a situation, track and visualize events as they unfold, and predict possible outcomes as the situation develops. Airborne video surveillance (AVS) technology is critical to meeting post-9/11 requirements for more ubiquitous and persistent surveillance.

Current UAV systems (e.g., Predator, shown below) do not provide high-resolution target tracking and recognition: they are large and must fly at medium or high altitudes, which degrades the spatial resolution of the captured video. In addition, their cost is prohibitive for widespread deployment and use.
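To make the altitude argument concrete, the standard pinhole-camera relation gives the ground sample distance (GSD), the ground footprint of one pixel for a nadir-pointing camera: GSD = altitude × pixel pitch / focal length. The sketch below uses a hypothetical camera (5 µm pixels behind a 50 mm lens) and hypothetical altitudes; these are illustrative values, not Predator or M-UAV specifications.

```python
def ground_sample_distance_cm(altitude_m: float, pixel_pitch_um: float, focal_length_mm: float) -> float:
    """Ground footprint of one pixel (cm) for a nadir-pointing pinhole camera.

    GSD = altitude * pixel_pitch / focal_length, from similar triangles.
    """
    pixel_pitch_m = pixel_pitch_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    return altitude_m * pixel_pitch_m / focal_length_m * 100.0


# Hypothetical camera: 5 um pixels, 50 mm lens (illustrative values only).
for altitude in (6000.0, 150.0):  # hypothetical "high" and "low" flight altitudes
    gsd = ground_sample_distance_cm(altitude, 5.0, 50.0)
    print(f"{altitude:>7.0f} m -> {gsd:.1f} cm/pixel")
```

With these assumed values, dropping from 6,000 m to 150 m shrinks the pixel footprint by a factor of 40, from about 60 cm to about 1.5 cm, which is the resolution gap that low-flying M-UAVs aim to close.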

Recent technological advances in sensor miniaturization, electro-mechanical control, aerospace design, and wireless video communication have enabled the physical realization of small, lightweight UAVs, which we refer to as micro-UAVs (M-UAVs). Compared to conventional UAV systems, M-UAVs offer the unique advantages of low cost and rapid deployment. Their flexibility and ability to fly at low altitudes facilitate the collection of detailed video for target tracking, identification, and recognition. A great deal of progress has been made in the physical design of M-UAVs and in the development of vision technologies for autonomous flight control. However, deploying and controlling a group of autonomous M-UAVs for large-scale video surveillance, situational awareness, and target tracking remains very challenging.

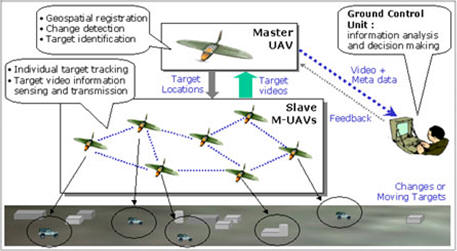

We are presently pursuing a number of research activities aimed at developing system-level M-UAV technologies necessary for low-cost, flexible, and rapidly deployable AVS applications. First, we are examining a number of collaborative system architectures and enabling technologies that allow a group of M-UAVs to perform large-scale persistent video surveillance. One such architecture is illustrated below.

In this proposed architecture, a larger master UAV flies at a higher altitude and guides a group of slave M-UAVs flying at much lower altitudes. The master UAV has sufficient computational resources for real-time geospatial registration and communicates with the control center over a wireless channel. After precise geospatial registration and global motion compensation of its camera view, the master UAV identifies and selects both static and dynamic targets for more detailed surveillance. It then distributes the geospatial locations and visual target context to the slave M-UAVs, directing and guiding them to track the targets.
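The article does not say how the master UAV decides which slave M-UAV should track which target. As one hypothetical policy, the sketch below greedily pairs each target with the closest free slave, assuming positions have already been geo-registered into a local east/north frame in metres. The names `Position` and `assign_targets` are illustrative, not part of the described system.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Position:
    east_m: float   # local east coordinate, metres (assumed local tangent plane)
    north_m: float  # local north coordinate, metres


def assign_targets(slaves: dict[str, Position], targets: dict[str, Position]) -> dict[str, str]:
    """Greedily pair each target with the closest still-free slave M-UAV.

    Returns target_id -> slave_id. Extra targets (more targets than slaves) stay unassigned.
    """
    # Every (distance, target, slave) pair, shortest first.
    candidates = sorted(
        (math.dist((t.east_m, t.north_m), (s.east_m, s.north_m)), tid, sid)
        for tid, t in targets.items()
        for sid, s in slaves.items()
    )
    assignment: dict[str, str] = {}
    busy: set[str] = set()
    for _, tid, sid in candidates:
        if tid in assignment or sid in busy:
            continue  # target already covered or slave already tasked
        assignment[tid] = sid
        busy.add(sid)
    return assignment


slaves = {"s1": Position(0.0, 0.0), "s2": Position(1000.0, 0.0)}
targets = {"truck": Position(900.0, 50.0), "boat": Position(100.0, -20.0)}
print(assign_targets(slaves, targets))  # {'boat': 's1', 'truck': 's2'}
```

A greedy pairing is cheap enough to rerun on the master UAV whenever targets move, but it is not globally optimal; an optimal assignment (e.g., the Hungarian algorithm) or a cost that also weighs endurance and formation constraints would be natural refinements.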

The slave M-UAVs, flying at lower altitudes, track and lock onto the target(s) within their camera views to capture detailed video. After highly efficient video compression, the slave M-UAVs forward the compressed target video to the master UAV. The master UAV multiplexes all the target video from the slave M-UAVs together with the geospatial location metadata of each target, and forwards this to the control center for automatic or semi-automatic (human-assisted) target recognition, information analysis, and decision making. Feedback from the control center to the master UAV specifies which targets of interest require high-quality surveillance video from the slave M-UAVs, and requests that the wireless network provide increased error protection for these user-selected, high-priority video streams.
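One way to read this feedback loop is as unequal error protection: streams the control center flags as high priority receive a stronger forward error correction (FEC) code, at the cost of more transmitted bits. The sketch assumes hypothetical code rates of 1/2 for high-priority streams and 3/4 for the rest, and checks whether the resulting multiplex fits the master UAV's downlink. The article does not specify the actual protection scheme, and the names here are illustrative.

```python
from dataclasses import dataclass

# Hypothetical FEC code rates (source bits / transmitted bits).
HIGH_PRIORITY_CODE_RATE = 0.5   # rate-1/2 code: one parity bit per video bit
NORMAL_CODE_RATE = 0.75         # rate-3/4 code: one parity bit per three video bits


@dataclass
class TargetStream:
    slave_id: str
    source_kbps: float   # compressed target video rate from one slave M-UAV
    high_priority: bool  # set from control-center feedback


def channel_kbps(stream: TargetStream) -> float:
    """Rate actually transmitted once FEC parity is added."""
    code_rate = HIGH_PRIORITY_CODE_RATE if stream.high_priority else NORMAL_CODE_RATE
    return stream.source_kbps / code_rate


def plan_downlink(streams: list[TargetStream], link_kbps: float) -> tuple[dict[str, float], bool]:
    """Per-stream transmitted rates, plus whether their sum fits the downlink capacity."""
    rates = {s.slave_id: channel_kbps(s) for s in streams}
    return rates, sum(rates.values()) <= link_kbps


streams = [TargetStream("s1", 300.0, True), TargetStream("s2", 300.0, False)]
rates, fits = plan_downlink(streams, link_kbps=1500.0)
print(rates, fits)
```

Here both slaves send 300 kbit/s of video, but the high-priority stream occupies 600 kbit/s on the link versus 400 kbit/s for the other; if the total exceeded the link capacity, the master would have to lower source rates or protection levels.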

The proposed collaborative M-UAV surveillance framework has several unique advantages.

- The master UAV flies at a higher altitude, having access to a rich set of global image features that facilitate precise geospatial registration.

- The surveillance tasks are well partitioned and shared among the UAVs in the system. The master UAV and slave M-UAVs collaborate with each other, fully exploiting their specific capabilities and efficiently utilizing the available system resources.

- The proposed hierarchical architecture simultaneously achieves both global imagery intelligence at large scale and detailed video surveillance at fine scale.

- The collaboration framework, the inter-UAV communication capability, and the awareness of geospatial locations allow the UAV system to perform joint flight control, navigation, formation flying, and mission planning.

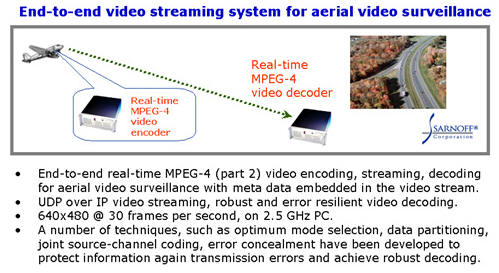

Given the limited resources (power, weight, etc.) available to M-UAVs, digital video compression is an important enabling technology for collaborative M-UAV systems. International video compression standards, such as MPEG-2, MPEG-4, and MPEG-4 Part 10 (also known as H.264/AVC), are designed and optimized for entertainment and consumer electronics applications. In typical entertainment video, the random motion of individual video objects dominates the scene. Aerial surveillance video collected by M-UAVs, however, exhibits very strong global motion, induced mainly by the moving camera platform. This characteristic, unique to AVS, can be used to significantly improve video coding efficiency. In previous work, we designed an end-to-end MPEG-4 Part 2 encoding and decoding system for aerial video surveillance and evaluated the performance of global motion estimation (GME). The study demonstrated that GME and global motion prediction significantly improve coding efficiency, reducing the video data rate for AVS by 30-40%.
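Global motion estimation fits a single parametric model of camera-induced motion to the whole frame. The sketch below assumes a six-parameter affine model and fits it by ordinary least squares to per-block motion vectors (for example, from a conventional block-matching stage). The model choice, function names, and input format are illustrative assumptions, not the authors' MPEG-4 Part 2 encoder.

```python
def _det3(a):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def _solve3(m, v):
    """Solve the 3x3 system m @ x = v by Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-9:
        raise ValueError("degenerate layout: need at least 3 non-collinear blocks")
    return [_det3([[v[r] if c == col else m[r][c] for c in range(3)] for r in range(3)]) / d
            for col in range(3)]


def estimate_affine_global_motion(block_centers, motion_vectors):
    """Least-squares fit of the affine global motion model
         x' = a1*x + a2*y + a3
         y' = a4*x + a5*y + a6
    block_centers: (x, y) block-centre coordinates in the current frame.
    motion_vectors: (dx, dy) per block, i.e. the block's match lies at (x + dx, y + dy).
    Returns (a1, a2, a3, a4, a5, a6).
    """
    ata = [[0.0] * 3 for _ in range(3)]  # A^T A, shared by both output coordinates
    atx = [0.0] * 3                      # A^T x'
    aty = [0.0] * 3                      # A^T y'
    for (x, y), (dx, dy) in zip(block_centers, motion_vectors):
        row = (x, y, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            atx[i] += row[i] * (x + dx)
            aty[i] += row[i] * (y + dy)
    return tuple(_solve3(ata, atx) + _solve3(ata, aty))


# Synthetic check: slight zoom and rotation plus a pan (illustrative values).
true_params = (1.01, 0.02, 3.0, -0.02, 1.01, -1.5)
centers = [(x, y) for x in range(8, 176, 16) for y in range(8, 144, 16)]
vectors = [(true_params[0] * x + true_params[1] * y + true_params[2] - x,
            true_params[3] * x + true_params[4] * y + true_params[5] - y) for x, y in centers]
print(estimate_affine_global_motion(centers, vectors))
```

Because the camera-induced motion of an aerial view is captured by six numbers rather than one vector per block, an encoder can predict the background from the warped reference frame and spend bits mainly on the residual and on independently moving objects. A practical GME would also iteratively discard blocks lying on moving targets, since they bias a plain least-squares fit.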

We are building on this research to develop low-complexity collaborative GME algorithms that enable the slave M-UAVs to achieve real-time MPEG-4 video encoding. We are presently investigating two major approaches. First, the target position provided by the master UAV can serve as a starting point for GME. Second, because the slave M-UAVs fly in formation, their navigation parameters are strongly correlated. We are exploiting this correlation to develop a joint low-complexity GME algorithm for the slave M-UAVs.
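The first approach can be illustrated with a translation-only search: instead of scanning every shift in a wide window, the slave M-UAV starts from a predicted offset (here assumed to be derived from the master UAV's target position, or from a neighboring slave's estimate given the formation correlation) and tests only a small neighborhood around it. The translational model, the synthetic frames, and the names `sad` and `translational_gme` are simplifying assumptions for illustration; a real encoder would refine a richer motion model.

```python
def sad(cur, ref, dx, dy, margin):
    """Sum of absolute differences between cur and ref shifted by (dx, dy), over the frame interior."""
    h, w = len(cur), len(cur[0])
    total = 0
    for y in range(margin, h - margin):
        for x in range(margin, w - margin):
            total += abs(cur[y][x] - ref[y + dy][x + dx])
    return total


def translational_gme(cur, ref, pred=(0, 0), radius=2, margin=8):
    """Test shifts within +/-radius of a predicted (dx, dy).

    Returns (best_dx, best_dy, candidates_tested).
    """
    best = None
    tested = 0
    for dy in range(pred[1] - radius, pred[1] + radius + 1):
        for dx in range(pred[0] - radius, pred[0] + radius + 1):
            if max(abs(dx), abs(dy)) > margin:
                continue  # shift would read outside the reference frame
            cost = sad(cur, ref, dx, dy, margin)
            tested += 1
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    if best is None:
        raise ValueError("no valid candidate shifts; increase margin or move pred")
    return best[1], best[2], tested


def texture(x, y):
    # Synthetic, non-repeating test pattern (illustrative).
    return (7 * x * x + 13 * x * y + 3 * y * y + 5 * x) % 251


ref = [[texture(x, y) for x in range(48)] for y in range(48)]
cur = [[texture(x + 5, y - 3) for x in range(48)] for y in range(48)]  # true shift (5, -3)
print(translational_gme(cur, ref, pred=(4, -2), radius=2))
```

With a radius of 2, the warm-started search evaluates 25 candidate shifts, compared with 289 for an exhaustive ±8 search over the same margin; this is the kind of complexity reduction a good starting point is meant to buy.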

Reprinted by permission of the Center for Geospatial Intelligence, University of Missouri, Columbia