The University of California Division of Agriculture and Natural Resources’ Lindcove Research and Extension Center has deep roots in California’s citrus industry. Spanning from the San Joaquin Valley –– where 75 percent of the state’s citrus is grown –– to the Sierra Nevada foothills, the 175-acre LREC has been a critical link between cutting-edge citrus research and growers since 1959. More than 500 million citrus trees in California have been produced from disease-tested budwood that originated from Lindcove.

In California, citrus is gold. Valued at more than $2 billion annually, the citrus industry is a bedrock of the state’s agricultural economy and the lifeblood for more than 3,000 growers who farm 320,000 acres of citrus.

It’s a good bet that the root of any given tree on one of those farms can be traced back to the LREC, which today manages nearly 600 tree crop varieties –– mostly citrus species –– of mixed ages and sizes that are nurtured by soils and climate aligned with those of the commercial citrus growing region in the San Joaquin Valley.

Maintaining such an agricultural R&D gem has spurred the LREC’s managers to continually adopt methods and tools that will ensure the crops are available to the hundreds of scientists and educators from all over the world who come for research. One recent innovation was a reference tree database recording the location and attributes of 2,912 individual trees, built from 2012 1-meter imagery from the National Agriculture Imagery Program (NAIP). But in 2017, the managers began to wonder how unmanned aerial vehicle (UAV) imagery could benefit them.

At the same time, Ovidiu Csillik and his research supervisor, Dr. Maggi Kelly, were interested in how artificial intelligence and Trimble’s eCognition object-based image analysis (OBIA) technology could be used to map the trees accurately. Csillik, a Romanian postdoctoral research associate at the Carnegie Institution for Science in Stanford, was a visiting research scholar at UC Berkeley’s Department of Environmental Science, Policy, and Management in 2017, where Kelly is a professor. eCognition is an OBIA information extraction technology that employs user-defined processing workflows, called rule sets, that automatically detect, classify and map specified objects.

“Drone imagery, with its ability to provide sub-meter imagery on demand, has the potential to revolutionize precision agriculture workflows,” Csillik said. “eCognition can not only handle the complexity and large data volumes of UAV, it offers unlimited opportunities to use its algorithms to analyze spatial data and identify any object. This study would give us the chance to acquire UAV data as a proof-of-concept alternative to NAIP imagery, and to test the feasibility of using a CNN-OBIA method to automatically analyze the UAV imagery and accurately identify and map individual citrus trees.”

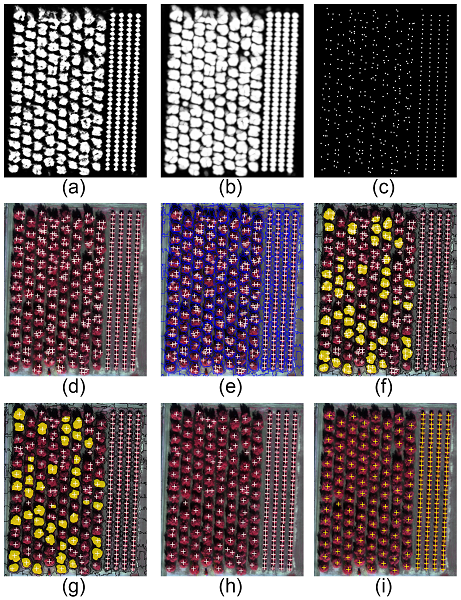

Probability heatmap of tree presence resulting from the CNN, with values between 0 and 1 (black to white) (a). To reduce the effects of possible noise, the heatmap was smoothed (b) and trees were identified using a threshold of 0.5 (c). The subset depicted here demonstrates the problem of multiple crown detection for large trees (d), which was solved by iteratively segmenting the smoothed heatmap and NDVI layer using SLIC superpixels of sizes larger than 40x40 pixels (e). Multiple crown detections were identified (f) and a single hit per tree was recomputed (g). The results after the refinement (h) better matched the ground reference samples (i).

Citrus at the center

In January 2017, UAV imagery was acquired over the entire 175-acre LREC site. Carrying a Parrot Sequoia multispectral camera, the aerial system flew at an altitude of 104 meters (340 feet), capturing images with average along- and across-track overlaps of 75 percent each, and used Shuttle Radar Topography Mission terrain data to ensure consistent altitudes across the captured area. In two flights, the UAV captured 4,574 multispectral images, which were then photogrammetrically processed to produce a 4-band orthoimagery mosaic with a ground sample distance of 12.8 centimeters. The red and near-infrared bands were also used to create a Normalized Difference Vegetation Index (NDVI) image. Both datasets were used as source data for eCognition.
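The NDVI layer follows the standard formula (NIR − Red) / (NIR + Red), computed pixel by pixel. The following is a minimal sketch in plain Python, assuming the two bands are available as equally sized grids of reflectance values. The function name and the sample values are illustrative and are not taken from the study.

```python
def ndvi(nir, red):
    """Return an NDVI grid computed per pixel as (NIR - Red) / (NIR + Red)."""
    result = []
    for nir_row, red_row in zip(nir, red):
        row = []
        for n, r in zip(nir_row, red_row):
            total = n + r
            # Guard against division by zero where both bands are 0 (e.g. no-data pixels).
            row.append((n - r) / total if total != 0 else 0.0)
        result.append(row)
    return result


# Hypothetical reflectance values: left column vegetation-like, right column soil-like.
nir_band = [[0.60, 0.30], [0.55, 0.28]]
red_band = [[0.10, 0.25], [0.08, 0.26]]
ndvi_grid = ndvi(nir_band, red_band)
```

Healthy vegetation reflects strongly in the near-infrared and absorbs red light, so tree pixels tend toward higher NDVI values than bare soil.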

With the image layers prepared, Csillik could focus on developing the rule set, the software’s workhorse, to process and classify the data. Because the LREC’s citrus trees are intensively managed, they vary widely in character –– pruned, unpruned, different ages, different geometries, touching crowns –– so he needed a rule set that would enable eCognition to delineate the trees under these varied conditions. His approach would enhance the software’s inherent analysis and object-detection capabilities by adding eCognition’s new deep-learning convolutional neural network (CNN) algorithms.

“eCognition is often the tool of choice for identifying and mapping vegetation because it’s so good at extracting certain features and accurately mapping them,” Csillik said. “Adding a CNN model into the software would allow it to go deeper into understanding what is a tree and locate it in the image to produce a more accurate result. I can also add this deeper level of analysis without having to write any code, saving me significant time.”

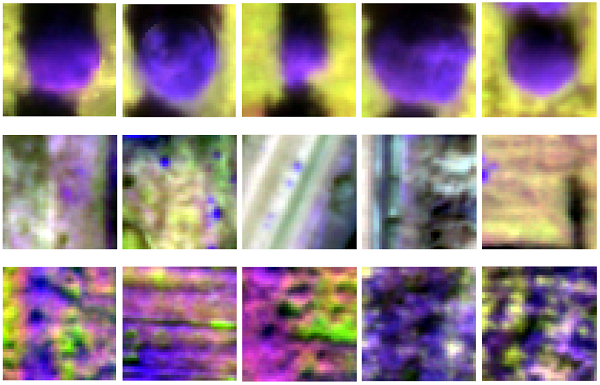

The first step of the rule set involved training the CNN model with three classes –– trees, bare soil and weeds –– with 4,000 training samples per class. To create the samples, he input the 4-band orthomosaic into eCognition, along with the tree-location points from the LREC’s existing tree database, and instructed the software to break up the mosaic into 40-by-40-pixel samples around those points. Sample collection was limited to the northern half of the LREC, leaving the southern half available for validation. Since the classes of the 12,000 samples were known, they could be used to teach the network to differentiate between classes by learning the specific features of each class. A quick study, the CNN completed its training in 13 minutes.

Examples of the training samples used. Each image is one 40x40-pixel training sample for: (a–e) trees, (f–j) bare ground and (k–o) weeds.
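The sample-collection step described above, cutting 40-by-40-pixel windows around known points, can be sketched roughly as follows. This is not eCognition’s implementation. It assumes a single-band image stored as a list of rows and points given as (row, column, label) tuples, and the function names, the toy image and the decision to skip windows that cross the image edge are all assumptions made for illustration.

```python
def extract_patch(image, center_row, center_col, size=40):
    """Cut a size-by-size window centered on a point; return None near the edge."""
    half = size // 2
    top, left = center_row - half, center_col - half
    bottom, right = top + size, left + size
    if top < 0 or left < 0 or bottom > len(image) or right > len(image[0]):
        return None  # assumption: windows crossing the image border are skipped
    return [row[left:right] for row in image[top:bottom]]


def build_samples(image, labeled_points, size=40):
    """Pair each usable patch with the class label of its center point."""
    samples = []
    for row, col, label in labeled_points:
        patch = extract_patch(image, row, col, size)
        if patch is not None:
            samples.append((patch, label))
    return samples


# Hypothetical 100x100 single-band image and three labeled points.
image = [[(r * 100 + c) % 255 for c in range(100)] for r in range(100)]
points = [(50, 50, "tree"), (30, 70, "bare soil"), (5, 5, "weeds")]
samples = build_samples(image, points)  # the (5, 5) point is too close to the edge
```

In the study the samples were 4-band and limited to the northern half of the site. The same windowing logic would apply per band, with the points filtered by location first.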

“The CNN mimics how the brain’s neural cortex identifies certain objects,” said Csillik. “For example, the brain recognizes a car because it has four wheels, windows, doors, etcetera. By showing the same image samples to the CNN thousands of times, it learns what a tree looks like in an image, even if the landscape is more complicated, or the tree is bigger or smaller, or pruned or unpruned.”

Now he was ready to run the second phase of the eCognition rule set: testing the newly trained CNN’s ability to identify and delineate individual citrus trees. Focusing on the southern half of the study site, the CNN used each 40-by-40-pixel region to estimate the likelihood that the image patch contained a citrus tree. Moving the analysis region like a sliding window across the UAV-based orthomosaic, it searched for objects that matched the tree features. When it identified a tree, it located it, assigned it a high value and moved on. In total, it took eCognition’s CNN two minutes to analyze the orthomosaic and produce a probability “heat map,” a grayscale image in which higher (brighter) values correspond to likely tree locations.
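The sliding-window idea can be illustrated with a short sketch. The trained CNN is replaced here by a stand-in scoring function (mean brightness), because the network itself is not described in enough detail to reproduce. The stride, the function names and the toy image are likewise assumptions.

```python
def sliding_window_heatmap(image, score_patch, size=40, stride=1):
    """Score every size-by-size window and collect the scores into a grid."""
    rows, cols = len(image), len(image[0])
    heatmap = []
    for top in range(0, rows - size + 1, stride):
        heat_row = []
        for left in range(0, cols - size + 1, stride):
            patch = [r[left:left + size] for r in image[top:top + size]]
            heat_row.append(score_patch(patch))
        heatmap.append(heat_row)
    return heatmap


def mean_brightness_score(patch):
    """Stand-in for a trained CNN: mean pixel value, assumed scaled to 0..1."""
    total = sum(sum(row) for row in patch)
    return total / (len(patch) * len(patch[0]))


# Toy 60x60 image: dark background with one bright 40x40 "crown" at rows/cols 10..49.
image = [
    [1.0 if 10 <= r < 50 and 10 <= c < 50 else 0.0 for c in range(60)]
    for r in range(60)
]
heatmap = sliding_window_heatmap(image, mean_brightness_score, size=40, stride=10)
```

The window that sits exactly over the bright square receives the highest score, which is the behavior the heat map relies on: values peak where the window is centered on a tree.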

The software then further processed the heat map to refine the tree objects and extract each tree center. Adding in the Normalized Difference Vegetation Index layer, Csillik used a Simple Linear Iterative Clustering (SLIC) algorithm to segment the heat map and the NDVI layer into superpixels, another newly integrated feature. With this process, eCognition steers the segmentation toward circular objects in the heat map and toward separating green vegetation from bare soil in the NDVI layer, which enables it to remove multiple crown-detection errors and refine its tree delineation, improving the detection and location of single citrus trees. This task took less than 10 seconds. In total, eCognition analyzed, identified and delineated 3,105 individual trees in 30 minutes.
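As the figure caption earlier in the article notes, the heat map was smoothed and then thresholded at 0.5 before the superpixel refinement. The sketch below illustrates only those two steps and does not reproduce the SLIC refinement. The article does not say which smoothing filter was used, so a 3x3 mean filter is assumed here, and the function names and toy values are hypothetical.

```python
def smooth(heatmap):
    """3x3 mean filter (an assumed choice); border cells average existing neighbors."""
    rows, cols = len(heatmap), len(heatmap[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            values = [
                heatmap[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
            ]
            out[r][c] = sum(values) / len(values)
    return out


def threshold(heatmap, cutoff=0.5):
    """Mark cells whose value is above the cutoff as tree candidates."""
    return [[value > cutoff for value in row] for row in heatmap]


# Hypothetical heat map: a strong 3x3 response plus one noisy pixel in the corner.
heatmap = [
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 1.0, 1.0, 0.0],
    [0.0, 1.0, 1.0, 1.0, 0.0],
    [0.0, 1.0, 1.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.9],
]
tree_mask = threshold(smooth(heatmap))
```

In this toy case the corner pixel would pass the threshold on the raw heat map but falls below it after smoothing, while the center of the strong response survives.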

To validate how well the approach performed, Csillik compared the CNN/OBIA-generated results with the LREC’s existing tree database. Using common evaluation statistics, he calculated True Positives (a tree is correctly identified), False Positives (a tree is incorrectly identified; a commission error) and False Negatives (a tree is missed; an omission error). Based on those calculations, the overall accuracy of his debut approach and final classification was 96.2 percent –– a “nice surprise.”
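True Positive, False Positive and False Negative counts are commonly combined into precision, recall and an F-score. The article does not state which statistic the 96.2 percent figure refers to, nor the underlying counts, so the sketch below uses invented numbers purely to show the arithmetic.

```python
def detection_scores(true_pos, false_pos, false_neg):
    """Return (precision, recall, F-score); assumes at least one true positive."""
    precision = true_pos / (true_pos + false_pos)  # penalizes commission errors
    recall = true_pos / (true_pos + false_neg)     # penalizes omission errors
    f_score = 2 * precision * recall / (precision + recall)
    return precision, recall, f_score


# Hypothetical counts, not the study's results.
precision, recall, f_score = detection_scores(true_pos=90, false_pos=10, false_neg=5)
```

Reporting precision and recall separately shows whether a detector tends to invent trees or to miss them, which a single combined figure hides.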

“Having worked with eCognition for over seven years, I was confident that the integrated CNN would perform well,” Csillik said. “But it was a nice surprise to see how well it performed. With 96 percent accuracy, that level of automated precision could provide the ability to identify and delineate individual trees using automated methods as an alternative to manual delineation. Applying this process to high-resolution UAV imagery would offer a much faster, more precise and repeatable method for long-term crop management for growers and orchard managers like those at the LREC.”

Those LREC managers are, indeed, already intrigued by the idea. After seeing Csillik and his team’s classified map of citrus trees, they initiated plans to apply the approach to other study areas, explore how transferable it is and determine how easily they can update their tree maps over time.

Csillik would be the first to say that more research on pairing UAV imagery with CNN-OBIA technology is needed to fully realize how this approach can better the precision agriculture industry, but he is optimistic, both about this initial result and future ones to come.

“I think drone imagery analysis is going to be a big part of precision agriculture going forward,” Csillik said. “Since it offers imagery on demand, you can plan the time of day, season, spatial resolution, and spectral resolution you need. Analyzing that data with the speed, intelligence and adaptability of deeper-learning OBIA not only provides a processing environment that can manage significant data volumes, it can readily classify and map field assets down to the individual tree. That’s precision.”

For California citrus growers and researchers, that’s a golden opportunity.