We have seen reports of successful data collection and data processing efforts in the aftermath of Katrina, for example. What is remarkable is that these were often bottom-up initiatives by individuals or groups of committed geospatial professionals, who used their existing contacts in a collaborative effort to get as much raw (imagery) data as possible processed and made accessible to whoever could use it (see Howard Butler's article). Despite this and other positive news, the general feeling seems to be that access to useful geo-data during and after the crisis could have been much better. There should be better access to vector data, either by FTP (File Transfer Protocol, a standard) or through standards-based Web services that can be accessed from desktop GIS and CAD systems or from lightweight Web clients.

The question is: what can we do to prepare for such events? Others have already pointed out that metadata registries (catalog services) and good-quality metadata are enormously important. In this article I will look at some of the other challenges.

Heterogeneous data

Geospatial information plays an important role in crisis situations because of its inherent nature: it is information about 'where' (location), 'what' (buildings, roads, water masses, vehicles and other moving objects), 'how many' (people, livestock) and possibly even 'who' (people). This geospatial data can be:

- high-precision (building designs, underground infrastructure of pipelines, cables, sewers) or less detailed (topographic maps)

- vector or raster

- static (one moment in time) or dynamic (tracking information of moving objects, sensor information)

- 2D, 2.5D (terrain models) or 3D

- based on geometry (explicit coordinates) or topology (implied geometry), etc.

Cross-organization and cross-jurisdiction

Data produced or collected by a variety of agencies and companies is, in theory, interesting and valuable. Examples include road data collected by the city traffic department, public records at the city, county or state level, and data from utilities, engineering firms and construction companies.

A company or agency may use specialized software to organize and manage data files and geo-databases within the organization. Examples include Bentley's ProjectWise, ESRI's ArcCatalog, or other enterprise-wide metadata registries or catalogues.

However, between organizations and agencies, it's a different story. Finding relevant data will often depend on ad-hoc inquiries, and data will only rarely be made discoverable and available in a structured way. There will be cooperation and data exchange between the agencies most involved, but is that adequate?

Queries like 'select all buildings within 1 mile of this chemical plant, and tell me approximately how many people live in that neighborhood' have to be answered in these situations. Ideally, such a query could be formulated in a Web application and sent to all registered and connected data sources.
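The query above can be sketched in a few lines, assuming each connected data source can be wrapped as a function that returns building records with WGS 84 longitude/latitude and an estimated number of residents. The `Building` record, the source functions and the `est_residents` attribute are hypothetical; a real deployment would send standards-based requests (for example to WFS servers) and would buffer in a projected coordinate system, whereas this sketch uses a simple great-circle distance test.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_008.8  # mean Earth radius in metres
MILE_M = 1_609.344            # one international mile in metres


@dataclass
class Building:
    source: str          # agency/server that supplied the record (hypothetical)
    building_id: str
    lon: float
    lat: float
    est_residents: int   # hypothetical attribute; sources rarely agree on this


def haversine_m(lon1, lat1, lon2, lat2):
    """Great-circle distance in metres between two lon/lat points (spherical Earth)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def buildings_near(sources, plant_lon, plant_lat, radius_m=MILE_M):
    """Send the same query to every registered source, merge hits, total residents."""
    hits = []
    for fetch in sources:  # each source is a callable returning Building records
        for b in fetch():
            if haversine_m(plant_lon, plant_lat, b.lon, b.lat) <= radius_m:
                hits.append(b)
    return hits, sum(b.est_residents for b in hits)


# Two hypothetical in-memory sources standing in for agency servers.
def city_source():
    return [Building("city", "c1", -90.0, 30.01, 40),   # ~1.1 km from the plant
            Building("city", "c2", -90.0, 30.02, 25)]   # ~2.2 km, outside 1 mile


def county_source():
    return [Building("county", "k1", -90.0, 29.995, 12)]  # ~0.6 km


hits, people = buildings_near([city_source, county_source], -90.0, 30.0)
```

The fan-out loop is the part that only works smoothly when every source answers the same kind of request; the rest of this article is about what stands in the way of that.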

Syntactic interoperability

In an emergency, data in many different formats will need to be accessed and used. These data are most likely maintained by many agencies and reside on many servers, and the software used to process, maintain and publish them will come from different vendors whose systems may or may not talk to each other. This makes effective information reuse a challenge: combining these data in one multi-source Web client for visualization, query and analysis is not trivial unless the servers all support the Open Geospatial Consortium's (OGC's) OpenGIS Web Map Service Implementation Specification (WMS).
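To illustrate why a common interface helps, the sketch below builds WMS GetMap requests for two hypothetical agency servers. It assumes WMS version 1.3.0, in which `CRS=EPSG:4326` uses latitude/longitude axis order in `BBOX`; the server URLs and layer names are invented. Because every request shares the same `BBOX`, `CRS`, `WIDTH` and `HEIGHT`, a client can overlay the returned images directly, regardless of which vendor's software produced them.

```python
from urllib.parse import urlencode


def wms_getmap_url(base_url, layers, bbox, width=800, height=600, fmt="image/png"):
    """Build a WMS 1.3.0 GetMap request URL.

    bbox is (min_lat, min_lon, max_lat, max_lon): with WMS 1.3.0 and
    CRS=EPSG:4326 the axis order is latitude first.
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": ",".join(layers),
        "STYLES": "",  # empty value: default style for every layer
        "CRS": "EPSG:4326",
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": fmt,
        "TRANSPARENT": "TRUE",
    }
    # Leave ':', ',' and '/' unescaped for readability; servers accept both forms.
    return base_url + "?" + urlencode(params, safe=":,/")


# The same view requested from two hypothetical servers, ready to be stacked.
view = dict(bbox=(29.90, -90.15, 30.05, -89.95), width=800, height=600)
urls = [
    wms_getmap_url("https://city.example.org/wms", ["roads", "buildings"], **view),
    wms_getmap_url("https://utility.example.org/wms", ["pipelines"], **view),
]
```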

Fortunately, for both vector and raster data, many geospatial software products can read the native formats of at least one other vendor's product (the 'direct read' option). This makes file-based exchange of geo-data less of a nuisance than it was 10 years ago. For Web service-based retrieval and visualization, on the other hand, other solutions are necessary, ideally combinations of Web clients and Web services from different vendors. A 'closed' Web application, in which service and client have to come from the same vendor, is not an option for connecting and combining the data of these different organizations and agencies, each with its own enterprise systems. Interoperability based on OGC's Web Service interface standards and, for example, the Geography Markup Language (GML) makes more sense here.

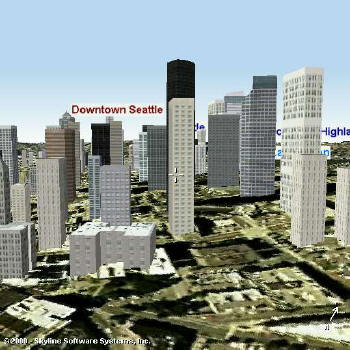

One of the central ideas of the OGC is that, to improve data and software interoperability, there have to be standardized interfaces between the different components (possibly from different vendors) of geo-information systems. The first Web Service standards in the series (WMS and the OpenGIS® Web Feature Service Implementation Specification, or WFS) were designed to publish 2D geo-information. For non-expert users, an easy-to-use interface for 3D visualization of city areas or streets would be valuable, whether for damage assessment or simply for navigation and for finding buildings or critical situations. For publishing 3D data there is now the Web Terrain Server (WTS) Discussion Paper. A WTS service responds with a raster image of the 3D data. For example, the WTS request below will produce a 3D view of downtown Seattle (see Figure 1):

http://any.wts.com/service.asp?server=WTS&request=GetView&version=0.3&srs=EPSG:4326&poi=-122.3341683,47.61022834,0&distance=1400&pitch=5&yaw=30&aov=15&width=512&height=512&format=jpeg&quality=medium
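Building the request programmatically makes each viewing parameter explicit. This is a minimal sketch using only Python's standard library; the host `any.wts.com` and all parameter names are copied from the example request, and the interpretation of `distance`, `pitch`, `yaw` and `aov` given in the comments is an assumption rather than a quotation from the Discussion Paper.

```python
from urllib.parse import urlencode

# Placeholder endpoint taken from the example request; not a real service.
WTS_ENDPOINT = "http://any.wts.com/service.asp"


def wts_getview_url(lon, lat, elev, distance, pitch, yaw, aov,
                    width=512, height=512, fmt="jpeg", quality="medium"):
    """Build a WTS GetView request for a perspective view of a point of interest."""
    params = {
        "server": "WTS",
        "request": "GetView",
        "version": "0.3",
        "srs": "EPSG:4326",
        "poi": f"{lon},{lat},{elev}",  # point of interest: lon, lat, elevation
        "distance": distance,          # viewer distance from the POI (metres assumed)
        "pitch": pitch,                # vertical viewing angle in degrees (assumed)
        "yaw": yaw,                    # horizontal viewing direction in degrees (assumed)
        "aov": aov,                    # angle of view in degrees (assumed)
        "width": width,
        "height": height,
        "format": fmt,
        "quality": quality,
    }
    # Keep ':' and ',' unescaped so the result matches the hand-written request.
    return WTS_ENDPOINT + "?" + urlencode(params, safe=":,")


seattle = wts_getview_url(-122.3341683, 47.61022834, 0,
                          distance=1400, pitch=5, yaw=30, aov=15)
```

Changing only `yaw` in a loop, for instance, would yield a series of views circling the same point, which is the kind of non-interactive 3D publishing WTS is suited for.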

A WTS service is a good solution when the purpose is to publish 3D perspective views of a city or a landscape. But for navigation through the 3D scene, for 'identify' operations (getting more information about an object) and for spatial analysis, an actual 3D WFS service would be a better option.

The WFS specification itself does not address the dimension of the geometries (2D or 3D). Because the standard output format of a WFS service is GML, it is the GML specification that matters here. GML geometry types allow for x, y and z coordinates, so modeling 2.5D information is possible in GML. In addition, GML version 3.0 introduced the 'Solid' geometry type, which can be used for 'full' 3D objects. GML 3.0 also makes it possible to use a topological data structure (a 3D object as a TopoSolid with references to faces, edges and nodes).
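The x, y and z coordinates mentioned above can be read with a standard XML parser. This is a minimal sketch assuming a GML 3.1-style `gml:posList` carrying an `srsDimension="3"` attribute, in the `http://www.opengis.net/gml` namespace used by GML 3.0/3.1; the building outline and its local x/y/z values are made up. It handles a 2.5D surface (a flat roof outline at 30 m), not a GML 3 `Solid`, which would need another level of parsing.

```python
import xml.etree.ElementTree as ET

GML_NS = {"gml": "http://www.opengis.net/gml"}


def parse_pos_list(elem, default_dim=2):
    """Turn a gml:posList into coordinate tuples, honouring srsDimension."""
    dim = int(elem.get("srsDimension", default_dim))
    values = [float(v) for v in elem.text.split()]
    if len(values) % dim:
        raise ValueError(f"{len(values)} values do not divide into {dim}-tuples")
    return [tuple(values[i:i + dim]) for i in range(0, len(values), dim)]


# Hypothetical roof outline: x and y in a local metric grid, z is height (m).
fragment = """
<gml:Polygon xmlns:gml="http://www.opengis.net/gml">
  <gml:exterior>
    <gml:LinearRing>
      <gml:posList srsDimension="3">
        1000 2000 30  1010 2000 30  1010 2012 30  1000 2012 30  1000 2000 30
      </gml:posList>
    </gml:LinearRing>
  </gml:exterior>
</gml:Polygon>
"""

root = ET.fromstring(fragment.strip())
coords = parse_pos_list(root.find(".//gml:posList", GML_NS))
heights = {z for _, _, z in coords}  # a single value means a flat (2.5D) surface
```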

Structural and semantic heterogeneity

The need for syntactic interoperability (agreement on formats and interface syntax, so that systems can parse each other's requests and data) in a multi-source, multi-vendor distributed software architecture is addressed by standards-based Web services and vector formats like GML. But this is only the first step toward real information integration. The 'structural and semantic' heterogeneity of the data also has to be resolved.

Geospatial data, like any other data, is stored according to a particular conceptual view of the part of reality that is relevant to the organization producing and/or maintaining it. The purpose of collecting or digitizing a specific set of geo-data influences modeling decisions and, as a consequence, the actual data models (the database schemas). Names of tables and attributes, granularity (many object types with few attributes, or few object types with many attributes), domain values, etc. are all affected by the environment in which the data will be used.
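Structural differences of this kind are commonly handled by mapping each source schema onto a shared target model. The sketch below assumes two hypothetical schemas (a city table with heights in feet and a county table with coded building types) and a made-up common model with `id`, `use` and `height_m`. It resolves differences in naming, coding and units, but it cannot tell whether "residential" means the same thing to both agencies.

```python
# Hypothetical source records describing buildings, modelled differently.
city_record = {"BLDG_ID": "C-17", "USE": "residential", "HGT_FT": 33}
county_record = {"parcel": "K-9", "structure_type": "RES", "height_m": 7.5}

FEET_TO_M = 0.3048
COUNTY_USE_CODES = {"RES": "residential", "COM": "commercial"}  # assumed code list

# Per source: common attribute -> function that extracts it from a source record.
MAPPINGS = {
    "city": {
        "id": lambda r: r["BLDG_ID"],
        "use": lambda r: r["USE"],
        "height_m": lambda r: round(r["HGT_FT"] * FEET_TO_M, 2),  # feet -> metres
    },
    "county": {
        "id": lambda r: r["parcel"],
        "use": lambda r: COUNTY_USE_CODES[r["structure_type"]],  # decode domain value
        "height_m": lambda r: r["height_m"],
    },
}


def to_common(source, record):
    """Translate one source record into the shared data model."""
    return {attr: extract(record) for attr, extract in MAPPINGS[source].items()}


harmonized = [to_common("city", city_record), to_common("county", county_record)]
```

Keeping the mapping as data rather than scattered conversion code makes it easier for the agencies involved to review and agree on it.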

Apart from differences in data structure, differences in information semantics will also stand in the way of straightforward multi-source data integration. What counts is the meaning of the words and terms used within a domain, and often their definitions are nowhere to be found. They remain implicit because the users within the organization understand the context. But when data is reused for other purposes or by other organizations, this 'inside knowledge' is missing.

The structural and semantic heterogeneity of the data sources can result in unsuccessful queries and, in the worst case, lead to inappropriate interpretations. In mission-critical and time-critical situations, this is highly undesirable.

Methods and time of harmonization / Data model integration

For effective emergency response, the agencies and organizations that may be involved need to start (or continue) working on common data models for their geospatial data. Data model harmonization is important for a number of reasons:

- To make integrated querying and data analysis over these separate data sets possible (distributed query and analysis)

- For consistent visualization (use the same cartographic representation such as colors, line width and symbology for objects on the map that are conceptually similar, even though the terminology used in the source data sets is different)

- To provide unambiguous metadata for catalogue services meant for discovery of the data sets
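The consistent-visualization point can be made concrete with a shared symbology table keyed by harmonized feature classes. In this minimal sketch the class names, colours, line widths and agency terms are all hypothetical; each agency maps its own terminology onto the shared classes, and an unmapped term raises an error instead of being drawn with a guessed symbol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Symbol:
    fill: str          # hex colour
    line_width: float  # points


# Hypothetical harmonized classes, each with one agreed cartographic symbol.
COMMON_SYMBOLOGY = {
    "hospital": Symbol("#d7191c", 1.5),
    "school": Symbol("#fdae61", 1.0),
    "shelter": Symbol("#2c7bb6", 1.0),
}

# Each (hypothetical) agency maps its own terms onto the shared classes.
TERM_TO_CLASS = {
    "state_health": {"Acute Care Facility": "hospital"},
    "city": {"HOSP": "hospital", "SCH": "school", "EVAC_CTR": "shelter"},
}


def symbol_for(source, term):
    """Return the agreed symbol; fail loudly on unmapped terms instead of guessing."""
    try:
        feature_class = TERM_TO_CLASS[source][term]
    except KeyError:
        raise KeyError(f"{source!r} term {term!r} has no harmonized class") from None
    return COMMON_SYMBOLOGY[feature_class]


# Different source terms, same conceptual class, same symbol on the map.
same = symbol_for("state_health", "Acute Care Facility") == symbol_for("city", "HOSP")
```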

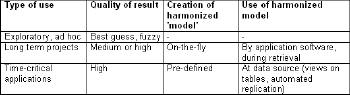

Table 1 gives an overview of possible solutions, depending on the type of application.

Many research disciplines are involved in data integration, from computer science and database research to artificial intelligence, the Semantic Web and Description Logics. We can certainly learn from these disciplines in trying to improve the structural and semantic interoperability of the geospatial resources of the organizations and agencies involved in emergency response.

An important part of the process is to 'reverse engineer' the conceptual data models of the organizations involved. The data structure (which feature types, which attributes and which relations between feature types) will be relatively easy to establish. Capturing the implicit semantics will be much harder, yet it is precisely this aspect that matters for integrated query and processing of the combined data sources. Causes of semantic ambiguity ('same terms, other meaning', 'same meaning, other terms') have to be discovered and managed.
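Once the implicit definitions have been written down as glossaries, the two causes of ambiguity named above can at least be flagged mechanically. This is a minimal sketch assuming each source provides a hypothetical `{term: definition}` glossary; definitions are compared only after lower-casing and whitespace normalization, so the output is a list of candidates for review by domain experts, not proof of equivalence or difference.

```python
from collections import defaultdict


def normalize(text):
    """Lower-case and collapse whitespace; deliberately naive."""
    return " ".join(text.lower().split())


def ambiguity_report(glossaries):
    """Flag 'same term, other meaning' and 'same meaning, other terms'.

    glossaries: {source: {term: definition}}.
    """
    by_term = defaultdict(dict)        # term -> {source: definition}
    by_definition = defaultdict(dict)  # definition -> {source: term}
    for source, glossary in glossaries.items():
        for term, definition in glossary.items():
            by_term[normalize(term)][source] = normalize(definition)
            by_definition[normalize(definition)][source] = term

    # Same term used by several sources with differing definitions.
    homonyms = {t: defs for t, defs in by_term.items()
                if len(defs) > 1 and len(set(defs.values())) > 1}
    # Same definition attached to differently named terms.
    synonyms = {d: terms for d, terms in by_definition.items()
                if len({normalize(t) for t in terms.values()}) > 1}
    return homonyms, synonyms


# Hypothetical glossaries from two agencies.
glossaries = {
    "traffic_dept": {"road": "paved way open to motor vehicles",
                     "closure": "segment blocked to all traffic"},
    "county_gis": {"road": "any mapped right-of-way, including unpaved tracks",
                   "blockage": "segment blocked to all traffic"},
}
homonyms, synonyms = ambiguity_report(glossaries)
```

In practice definitions seldom match word for word, so a check like this mainly shows where the conversations between organizations should start.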

Semantic ambiguity can only be discovered by intensive use of the combined data sources and by feedback of end-users and domain experts. This calls for close cooperation between organizations that are or could be involved in emergency response and recovery.

We need a commitment to cooperate and to spend the time and money required to build this (distributed) virtual data warehouse of geospatial information for use during and after emergencies. This work cannot be left until the moment an emergency arises.